Kubernetes for Micro Services

(Special thanks to Stephen Grider)

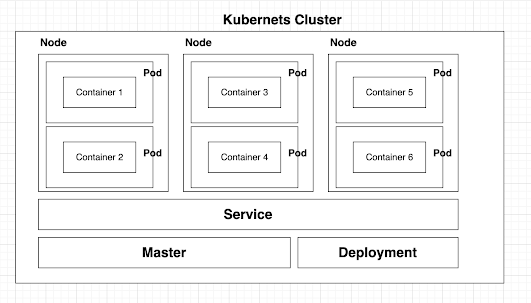

Cluster - The whole wrapper: a collection of Nodes plus the master (control plane) that manages them

Node - A virtual machine that runs containers

Pod - A wrapper around containers. A Pod can hold more than one container

Deployment - Monitors a set of Pods. If a Pod goes down, the Deployment recreates it to keep it running

Service - Provides an easy-to-remember URL for reaching the containers

How to create a Pod directly?

Create a Pod using a configuration file - kubectl apply -f posts.yml

Note: kubectl apply -f is used for any kind of change to the cluster, not only for creating Pods

Example of posts.yml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: posts
spec:
  containers:
    - name: post
      image: dilumdarshana/posts:0.0.1
```

List all running Pods - kubectl get pods

Delete Pods - kubectl delete -f posts.yml

General important Pod commands:

kubectl exec -it <pod name> -- <command>

kubectl logs <pod name>

kubectl delete pod <pod name>

kubectl describe pod <pod name>

How to create Pods via a Deployment?

kubectl apply -f <deployment.yml>

Example of deployment.yml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: posts-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: posts
  template:
    metadata:
      labels:
        app: posts
    spec:
      containers:
        - name: posts
          image: dilumdarshana/posts:0.0.1
```
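The selector.matchLabels block is how the Deployment finds the Pods it owns, so it has to match the labels in the Pod template. Services use the same label matching later on. Below is a minimal JavaScript sketch of that logic, assuming only equality-based matchLabels (no matchExpressions); the Pod names are hypothetical:

```javascript
// Equality-based label matching, the way matchLabels works:
// every key/value pair in the selector must be present on the Pod.
function matchesSelector(matchLabels, podLabels) {
  return Object.entries(matchLabels).every(
    ([key, value]) => podLabels[key] === value
  );
}

// Return the Pods a Deployment (or Service) with this selector would pick.
function selectPods(matchLabels, allPods) {
  return allPods.filter((pod) => matchesSelector(matchLabels, pod.labels));
}

// Hypothetical Pods for illustration; extra labels do not prevent a match.
const pods = [
  { name: "posts-depl-abc12", labels: { app: "posts", tier: "backend" } },
  { name: "event-bus-depl-xyz34", labels: { app: "event-bus" } },
];

console.log(selectPods({ app: "posts" }, pods).map((pod) => pod.name));
```

If the template labels do not satisfy the selector, the Deployment is rejected, which is why both places use app: posts here.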

General Deployment commands:

kubectl get deployments

kubectl describe deployment <deployment name>

kubectl delete deployment <deployment name>

How to redeploy after a Docker image change?

Method 01:

- After changing the code, rebuild the Docker image with a new tag: docker build -t dilumdarshana/posts:0.0.2 .

- Update the Kubernetes deployment config file to use the new image tag

- Redeploy the deployment: kubectl apply -f posts-depl.yml

Method 02:

With this method, the deployment.yml file does not need to change when the image version changes. We simply remove the image tag from the file, so the latest tag is used:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: posts-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: posts
  template:
    metadata:
      labels:
        app: posts
    spec:
      containers:
        - name: posts
          image: dilumdarshana/posts
```

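- Make changes in the code repository and build the Docker image without specifying a tag (Docker then tags it as latest)

docker build -t dilumdarshana/posts .

- Push the image to Docker Hub so the cluster can pull it

docker push dilumdarshana/posts

- Redeploy the changes to the cluster

kubectl rollout restart deployment <deployment name>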

That's it :)
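Method 02 works because of how Kubernetes picks a default imagePullPolicy when the config file does not set one: an image with no tag or the :latest tag defaults to Always (the image is pulled again whenever a new Pod starts), while any other tag defaults to IfNotPresent. Here is a simplified JavaScript sketch of that rule; the image-name parsing is an assumption that ignores most of the full image reference grammar:

```javascript
// Tag Kubernetes assumes for an image reference (simplified parsing).
// A colon after the last "/" starts the tag; a colon before it belongs to a
// registry host:port such as localhost:5000/posts.
function imageTag(image) {
  const [name, digest] = image.split("@"); // name@sha256:... pins a digest
  const lastSlash = name.lastIndexOf("/");
  const lastColon = name.lastIndexOf(":");
  if (lastColon > lastSlash) return name.slice(lastColon + 1);
  // No tag: Kubernetes assumes "latest" unless the image is pinned by digest.
  return digest ? null : "latest";
}

// Default imagePullPolicy when the container spec does not set one.
function defaultImagePullPolicy(image) {
  return imageTag(image) === "latest" ? "Always" : "IfNotPresent";
}

for (const image of ["dilumdarshana/posts", "dilumdarshana/posts:0.0.2"]) {
  console.log(image, "->", defaultImagePullPolicy(image));
}
```

This is also why the image has to be pushed before kubectl rollout restart: with Always, the new Pods pull from the registry rather than using a local build.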

About Services: how to access a Docker container through a URL?

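There are 4 types of Services:

1. Cluster IP* - For internal access between Pods inside the cluster

2. Node Port - For outside access (usually only for development)

3. Load Balancer* - For outside access (the recommended way to expose Pods)

4. External Name - For internal access: redirects in-cluster requests to an external DNS name (CNAME)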

General Service commands:

kubectl apply -f <service file>

kubectl get services

kubectl describe service <service name>

NodePort:

Example service configuration file:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: posts-srv
spec:
  type: NodePort
  selector:
    app: posts
  ports:
    - name: posts
      protocol: TCP
      port: 4001
      targetPort: 4001
```

ClusterIP:

Pods communicate with each other through ClusterIP Services. First, create another deployment for the Event Bus:

- Create the Docker image for the Event Bus: docker build -t dilumdarshana/event-bus .

- Create a Kubernetes deployment:

Example event-bus-depl.yml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: event-bus-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: event-bus
  template:
    metadata:
      labels:
        app: event-bus
    spec:
      containers:
        - name: event-bus
          image: dilumdarshana/event-bus
```

Deploy: kubectl apply -f event-bus-depl.yml

- Create a ClusterIP service

Add the Service section to the same event bus deployment config file. Multiple objects in one YAML file are separated by a line of three dashes (---):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: event-bus-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: event-bus
  template:
    metadata:
      labels:
        app: event-bus
    spec:
      containers:
        - name: event-bus
          image: dilumdarshana/event-bus
---
apiVersion: v1
kind: Service
metadata:
  name: event-bus-srv
spec:
  type: ClusterIP
  selector:
    app: event-bus
  ports:
    - name: event-bus
      protocol: TCP
      port: 5000
      targetPort: 5000
```

Run: kubectl apply -f event-bus-depl.yml

In the same way, the posts service also needs a ClusterIP Service. Edit the posts-depl.yml file:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: posts-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: posts
  template:
    metadata:
      labels:
        app: posts
    spec:
      containers:
        - name: posts
          image: dilumdarshana/posts
---
apiVersion: v1
kind: Service
metadata:
  name: posts-clusterip-srv
spec:
  type: ClusterIP
  selector:
    app: posts
  ports:
    - name: posts
      protocol: TCP
      port: 4001
      targetPort: 4001
```

Run: kubectl apply -f posts-depl.yml

All the Services created so far should now appear in the output of kubectl get services.

How does internal communication work?

Once a ClusterIP Service exists for a Pod, other Pods reach it by the Service name instead of 'localhost'. For example, the event bus can be called at http://event-bus-srv:5000/XXX
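Here is a minimal JavaScript sketch of building such URLs inside a service. It assumes Node 18+ (for the global fetch) and the default cluster domain cluster.local; the /events route is a hypothetical example, not something defined above:

```javascript
// Build the URL one Pod uses to call another Pod through its ClusterIP Service.
// The short Service name resolves inside the same namespace; passing a namespace
// produces the fully qualified name (assumes the default cluster.local domain).
function serviceUrl(serviceName, port, path = "/", namespace) {
  const host = namespace
    ? `${serviceName}.${namespace}.svc.cluster.local`
    : serviceName;
  return `http://${host}:${port}${path}`;
}

// Hypothetical example: send an event to the event bus (Node 18+ global fetch).
// The /events route is an assumption about the event bus API.
async function publishEvent(event) {
  const response = await fetch(serviceUrl("event-bus-srv", 5000, "/events"), {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(event),
  });
  return response.status;
}

console.log(serviceUrl("event-bus-srv", 5000, "/events"));
```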

What is the best way to expose Pods to the outside?

A NodePort Service exposes a port to the outside, but this is problematic: unless the port is set explicitly, Kubernetes picks a random node port (30000-32767 by default), so when the Service is recreated the port can change and the front-end application has to be updated as well. A better option is a Load Balancer Service.
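To make the NodePort issue concrete, here is a simplified JavaScript model of how a node port is chosen. It assumes the default range 30000-32767 and only mirrors the idea that a random free port is picked unless nodePort is set on the port entry of the Service file (30080 below is a hypothetical value); it is not the actual Kubernetes allocator code:

```javascript
// Default --service-node-port-range of the Kubernetes API server.
const NODE_PORT_MIN = 30000;
const NODE_PORT_MAX = 32767;

// Simplified model: a requested nodePort is kept as-is (if valid and free);
// otherwise a random free port in the range is chosen, so recreating the
// Service can give the front-end a different port.
function allocateNodePort(portsInUse, requested) {
  if (requested !== undefined) {
    if (requested < NODE_PORT_MIN || requested > NODE_PORT_MAX) {
      throw new Error(`nodePort ${requested} is outside ${NODE_PORT_MIN}-${NODE_PORT_MAX}`);
    }
    if (portsInUse.has(requested)) {
      throw new Error(`nodePort ${requested} is already allocated`);
    }
    return requested;
  }
  const free = [];
  for (let port = NODE_PORT_MIN; port <= NODE_PORT_MAX; port++) {
    if (!portsInUse.has(port)) free.push(port);
  }
  if (free.length === 0) throw new Error("no free node ports left");
  return free[Math.floor(Math.random() * free.length)];
}

console.log(allocateNodePort(new Set()));        // a random port in the range
console.log(allocateNodePort(new Set(), 30080)); // the requested port
```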

A load balancer can be set up with ingress-nginx, an Ingress controller for Kubernetes:

Documentation: https://github.com/kubernetes/ingress-nginx

Install the ingress controller:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.2/deploy/static/provider/cloud/deploy.yaml

The ingress controller listens on port 80, so make sure port 80 is free on your machine:

sudo lsof -i TCP:80

Then create an Ingress config file with the routing rules, as below:

Example: ingress-srv.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-srv
spec:
  ingressClassName: nginx
  rules:
    - host: posts.local
      http:
        paths:
          - path: /posts
            pathType: ImplementationSpecific
            backend:
              service:
                name: posts-clusterip-srv
                port:
                  number: 4001
```

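Then run:

kubectl apply -f ingress-srv.yml

For local development, posts.local also has to resolve to your machine, for example by adding 127.0.0.1 posts.local to the /etc/hosts file. After that, http://posts.local/posts should work from the browser.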

All the routes must be listed in the same Ingress file, as below. The use-regex annotation allows regular expressions in the paths:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-srv
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  ingressClassName: nginx
  rules:
    - host: posts.local
      http:
        paths:
          - path: /posts/create
            pathType: ImplementationSpecific
            backend:
              service:
                name: posts-clusterip-srv
                port:
                  number: 4001
          - path: /posts
            pathType: ImplementationSpecific
            backend:
              service:
                name: query-srv
                port:
                  number: 4003
          - path: /posts/?(.*)/comments
            pathType: ImplementationSpecific
            backend:
              service:
                name: comments-srv
                port:
                  number: 4002
          - path: /?(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: client-srv
                port:
                  number: 3000
```

Note: each path must be unique so that NGINX can tell which service a request belongs to. Ingress routes by host and path only, not by HTTP method.
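Because several of these paths are overlapping regular expressions, the order in which NGINX tries them matters. According to the ingress-nginx path-matching docs, paths are sorted by descending length and regex locations follow a first-match policy. The JavaScript sketch below models that for the routes above; treating equal lengths as keeping file order is an assumption:

```javascript
// Routes copied from ingress-srv.yml above.
const routes = [
  { path: "/posts/create", service: "posts-clusterip-srv", port: 4001 },
  { path: "/posts", service: "query-srv", port: 4003 },
  { path: "/posts/?(.*)/comments", service: "comments-srv", port: 4002 },
  { path: "/?(.*)", service: "client-srv", port: 3000 },
];

// Simplified model of ingress-nginx with use-regex: longest path first,
// each path is a case-insensitive regex anchored at the start of the URI,
// and the first match wins. Equal lengths keep file order (assumption;
// Array.prototype.sort is stable since ES2019).
function resolveBackend(uri, rules) {
  const ordered = [...rules].sort((a, b) => b.path.length - a.path.length);
  for (const rule of ordered) {
    if (new RegExp("^" + rule.path, "i").test(uri)) return rule;
  }
  return null;
}

for (const uri of ["/posts/create", "/posts", "/posts/123/comments", "/"]) {
  console.log(uri, "->", resolveBackend(uri, routes)?.service);
}
```

The catch-all /?(.*) would match every request, so it only works because the more specific paths are tried before it.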

Every time the code changes, we need to rebuild the Docker image, push it to Docker Hub and rerun the deployment, which is a nightmare to handle manually. Skaffold automates this:

Install: https://skaffold.dev/docs/install/

Verify the installation by running skaffold in a terminal.

The Skaffold config file goes in the root folder that contains all the microservices. Skaffold watches the Kubernetes config files and the code of each service, and automatically rebuilds, syncs and re-applies whatever changed. Create skaffold.yaml:

```yaml
apiVersion: skaffold/v4beta3
kind: Config
manifests:
  rawYaml:
    - ./infra/k8s/*
build:
  local:
    push: false
  artifacts:
    - image: dilumdarshana/client
      context: client
      sync:
        manual:
          - src: src/**/*.js
            dest: .
      docker:
        dockerfile: Dockerfile
    - image: dilumdarshana/comments
      context: comments
      sync:
        manual:
          - src: "*.js"
            dest: .
      docker:
        dockerfile: Dockerfile
    - image: dilumdarshana/event-bus
      context: event-bus
      sync:
        manual:
          - src: "*.js"
            dest: .
      docker:
        dockerfile: Dockerfile
    - image: dilumdarshana/moderation
      context: moderation
      sync:
        manual:
          - src: "*.js"
            dest: .
      docker:
        dockerfile: Dockerfile
    - image: dilumdarshana/posts
      context: posts
      sync:
        manual:
          - src: "*.js"
            dest: .
      docker:
        dockerfile: Dockerfile
    - image: dilumdarshana/query
      context: query
      sync:
        manual:
          - src: "*.js"
            dest: .
      docker:
        dockerfile: Dockerfile
```

Run: skaffold dev

When you stop the process (Ctrl+C), Skaffold deletes all the Pods, Deployments and Services it created.
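The sync rules in skaffold.yaml decide whether a change is copied straight into the running container or triggers a full image rebuild: if every changed file matches a sync rule, Skaffold syncs; otherwise it rebuilds. Here is a simplified JavaScript sketch of that decision. The small hand-written glob matcher is an assumption standing in for Skaffold's real one and supports only *, ** and ?:

```javascript
// Tiny glob-to-RegExp converter (assumption: supports only "*", "**" and "?").
// Paths are relative to the artifact's context folder, e.g. "client".
function globToRegExp(glob) {
  let pattern = "";
  for (let i = 0; i < glob.length; i++) {
    const ch = glob[i];
    if (ch === "*" && glob[i + 1] === "*" && glob[i + 2] === "/") {
      pattern += "(?:.*/)?"; // "**/" matches zero or more directories
      i += 2;
    } else if (ch === "*" && glob[i + 1] === "*") {
      pattern += ".*"; // a trailing "**" matches anything
      i += 1;
    } else if (ch === "*") {
      pattern += "[^/]*"; // "*" stays within one path segment
    } else if (ch === "?") {
      pattern += "[^/]";
    } else {
      pattern += ch.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex chars
    }
  }
  return new RegExp("^" + pattern + "$");
}

// Sync only if every changed file matches some sync rule; otherwise rebuild.
function planUpdate(changedFiles, syncRules) {
  const matchers = syncRules.map((rule) => globToRegExp(rule.src));
  const syncable = changedFiles.every((file) =>
    matchers.some((re) => re.test(file))
  );
  return syncable ? "sync" : "rebuild";
}

const clientRules = [{ src: "src/**/*.js", dest: "." }];
const postsRules = [{ src: "*.js", dest: "." }];
console.log(planUpdate(["src/components/App.js"], clientRules));
console.log(planUpdate(["index.js", "package.json"], postsRules));
```

In practice this means editing a .js file is picked up almost instantly, while changing package.json or the Dockerfile costs a full rebuild.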

How to set environment variables (secrets)?

kubectl create secret generic jwt-secret --from-literal=JWT_KEY=<secret key>

This creates a Secret object in the Kubernetes cluster. A Pod can use it by referencing it in the deployment config file, as below. The key in secretKeyRef must match the key given in --from-literal:

xxx-depl.yml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: auth-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: auth
  template:
    metadata:
      labels:
        app: auth
    spec:
      containers:
        - name: auth
          image: dilumdarshana/auth
          env:
            - name: JWT_KEY
              valueFrom:
                secretKeyRef:
                  name: jwt-secret
                  key: JWT_KEY
```

The variable can then be accessed from the code as process.env.JWT_KEY
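A common pattern is to check required variables once at startup, so a missing or misnamed Secret fails fast instead of causing errors later. Here is a minimal JavaScript sketch; requireEnv is a hypothetical helper name:

```javascript
// Return a required environment variable, failing fast if it is missing.
// `env` defaults to process.env but can be swapped out, e.g. in tests.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (value === undefined || value === "") {
    throw new Error(`${name} must be defined`);
  }
  return value;
}
```

In a service's entry point this would be called as const jwtKey = requireEnv("JWT_KEY"); before the server starts listening, so the Pod crashes with a clear message if the Secret is not wired up.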

The whole application design in a nutshell
